AI Governance and Compliance—Who’s Accountable?

I remember back in 2017, trying to convince organizations that AI was no longer just an option but a necessity. It was a tough sell. Then, in 2022, OpenAI released ChatGPT, and suddenly AI was everywhere—discussed in boardrooms, news articles, and everyday conversations. This surge in interest also brought AI governance into focus, with more organizations questioning how to ensure responsible AI use. Today, AI is deeply embedded in financial systems, influencing credit decisions, fraud detection, and compliance monitoring, making governance more critical than ever.

As AI-driven decision-making becomes more prevalent, so does the question of accountability. Who is responsible when an AI system makes biased decisions or generates false positives that overwhelm compliance teams?

This was the core discussion at the ABCOMP 2025 Annual Conference, where I had the privilege of speaking to a full room of compliance officers at The Peninsula Manila. Through interactive discussions, we explored the complexities of AI governance and why compliance professionals must take a proactive role in AI oversight.

The Credit Scoring Dilemma: Who’s Responsible?

I opened the talk with two hypothetical scenarios, and the audience's reaction to them was one of the most striking moments of the session. The first scenario:

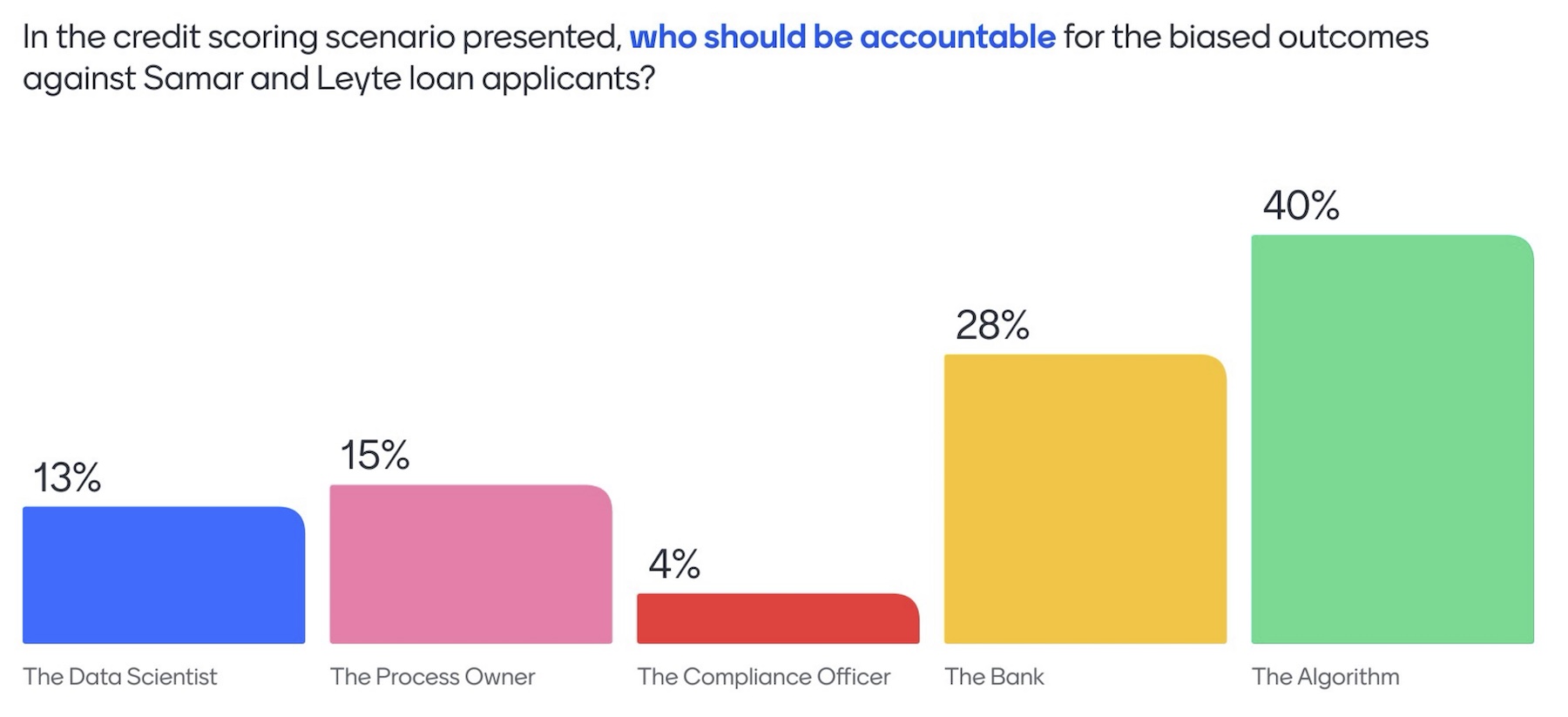

💡 A deployed AI-driven credit scoring system consistently assigns lower scores to loan applicants from Samar and Leyte—not due to financial behavior, but because of historical natural hazards data. Who should be held accountable?

The audience’s responses were revealing:

- 40% said the algorithm

- 28% pointed to the bank

- 15% held the process owner responsible

- Only 4% blamed the compliance officer

The variety in responses revealed a deeper issue: uncertainty. The room was divided because there is no clear-cut answer, which reflects the complexity of AI governance itself. That confusion underscores the need for clearer guidelines and standardized frameworks. Compliance officers are already working within an evolving landscape, and AI governance is an area where more structure is required to make accountability clear.
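Whoever ends up accountable, someone first has to notice the pattern. Here is a minimal sketch of how a compliance or model-risk team might screen for it, assuming approval outcomes are logged by region. The data is made up for illustration, the function names are hypothetical, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment practice; it is an assumed review trigger, not a BSP requirement.

```python
from collections import defaultdict


def approval_rates(applications):
    """Return the approval rate per region from (region, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for region, was_approved in applications:
        totals[region] += 1
        if was_approved:
            approved[region] += 1
    return {region: approved[region] / totals[region] for region in totals}


def adverse_impact_ratios(rates, reference_region):
    """Divide each region's approval rate by the reference region's rate."""
    reference_rate = rates[reference_region]
    return {region: rate / reference_rate for region, rate in rates.items()}


# Hypothetical, made-up outcomes for illustration only.
applications = (
    [("Metro Manila", True)] * 80 + [("Metro Manila", False)] * 20
    + [("Samar", True)] * 50 + [("Samar", False)] * 50
    + [("Leyte", True)] * 60 + [("Leyte", False)] * 40
)

REVIEW_THRESHOLD = 0.8  # assumed trigger for human review, not a regulatory value

rates = approval_rates(applications)
ratios = adverse_impact_ratios(rates, reference_region="Metro Manila")
flagged = sorted(region for region, ratio in ratios.items() if ratio < REVIEW_THRESHOLD)

print(flagged)  # regions whose approval rate falls well below the reference
```

A flag like this does not prove the model is unfair or settle who is accountable. It only tells a named person that a specific question now needs an answer.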

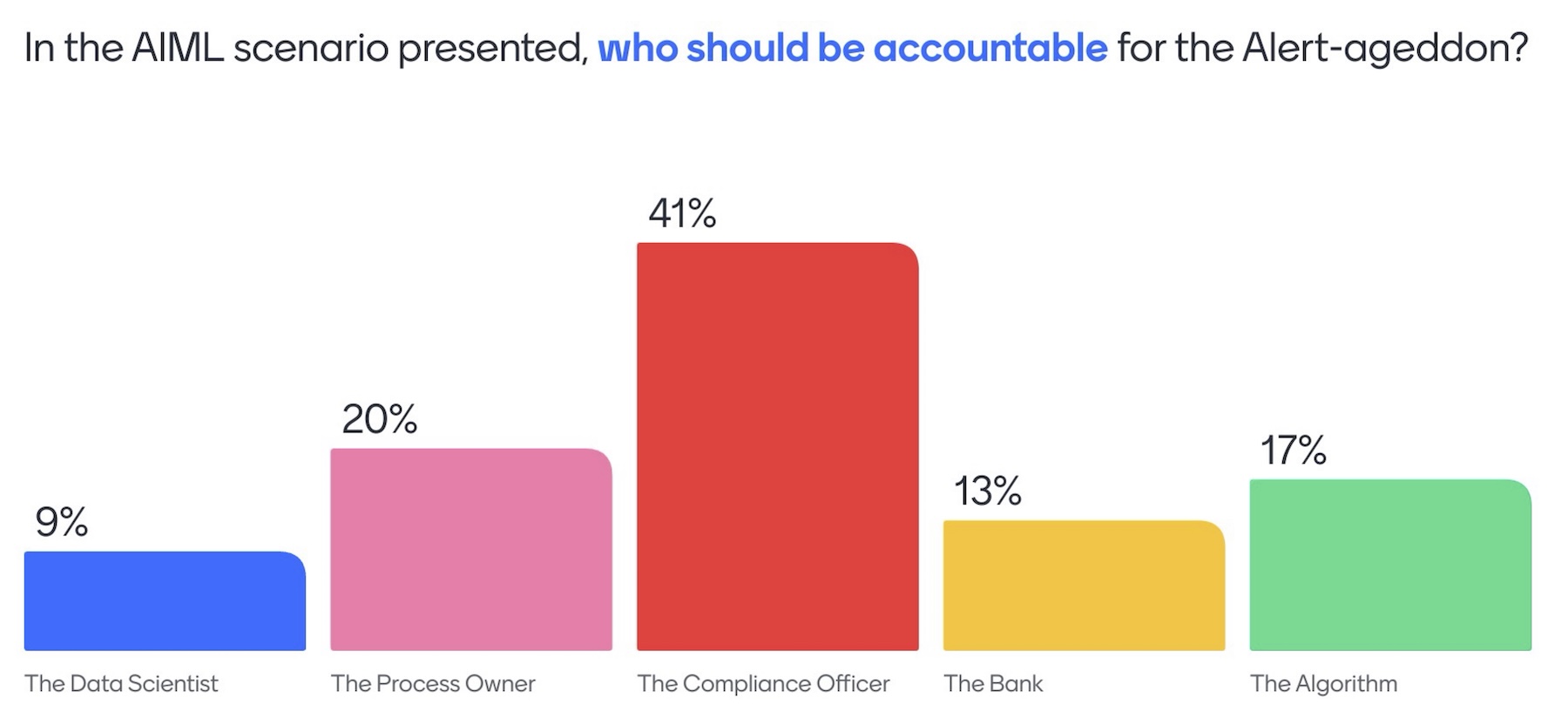

The “Alert-ageddon” Scenario: A Shift in Perception

Before presenting the next hypothetical scenario involving clients in Cebu, I teased the audience by referencing their responses from the credit scoring dilemma. “So, it’s the algorithm??? No compliance officers accountable?” I quipped. There were some chuckles, but also an awareness that this perspective would soon be challenged.

💡 AI-driven transaction monitoring generates an overwhelming number of false positives, leading to compliance teams being flooded with alerts. Who is accountable?

After some more teasing, the audience’s perception shifted. This time, 41% attributed responsibility to compliance officers.

I thought this shift in perspective was significant, and it highlighted yet another layer of uncertainty. If the AI algorithm is responsible in one case but compliance officers in another, where does true accountability lie? And what does it even mean for the algorithm or the bank to be responsible? The inconsistency in responses further illustrates the complexity of these scenarios and the critical need for clearer governance structures.

Rather than judging these differing perspectives, we should recognize the broader challenge they point to: adapting, implementing, and harmonizing existing frameworks is difficult, especially when familiarity with these standards varies across organizations. Regulatory bodies, industry groups, and institutions have already developed governance frameworks and principles, such as the EU AI Act, the OECD AI Principles, and ISO standards. The remaining work lies in aligning these frameworks and translating them into actionable accountability measures within organizations. AI governance must provide structure while remaining flexible enough to support innovation.

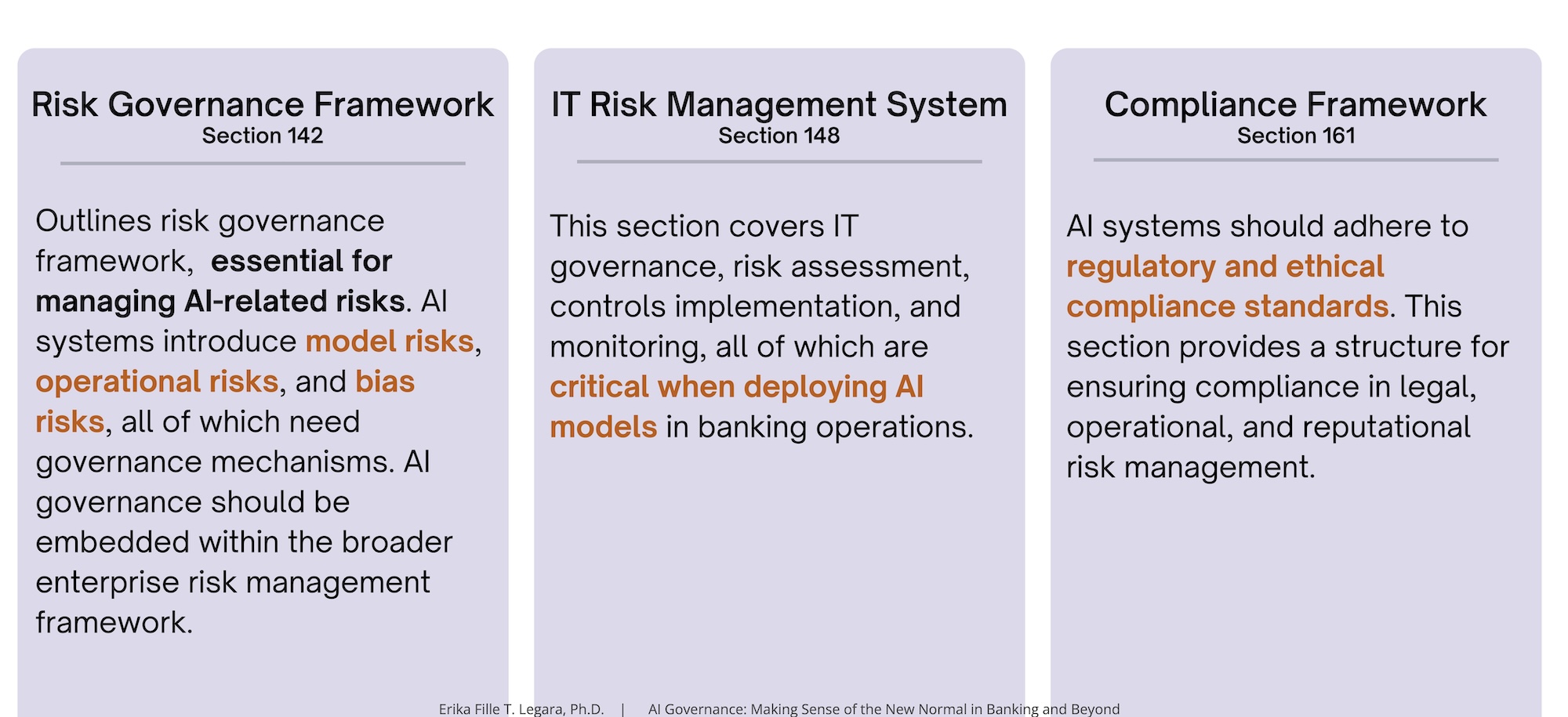

Governance is Already Here: The BSP’s MORB and AI Systems

While some organizations are waiting for specific AI governance regulations, the truth is that financial institutions already have the necessary compliance frameworks in place. To show this, we took a quick look at the Manual of Regulations for Banks (MORB) from the Bangko Sentral ng Pilipinas (BSP), which I argued already includes several provisions that can be applied to AI systems (of course, I made good use of an AI-powered tool to navigate the MORB’s 1,348 pages 😉).

“What does this tell us?” I asked the audience.

Waiting for an explicit AI governance circular is unnecessary. Compliance and audit teams should already be applying these existing regulations to AI-driven processes.

Building AI-Ready Compliance Teams

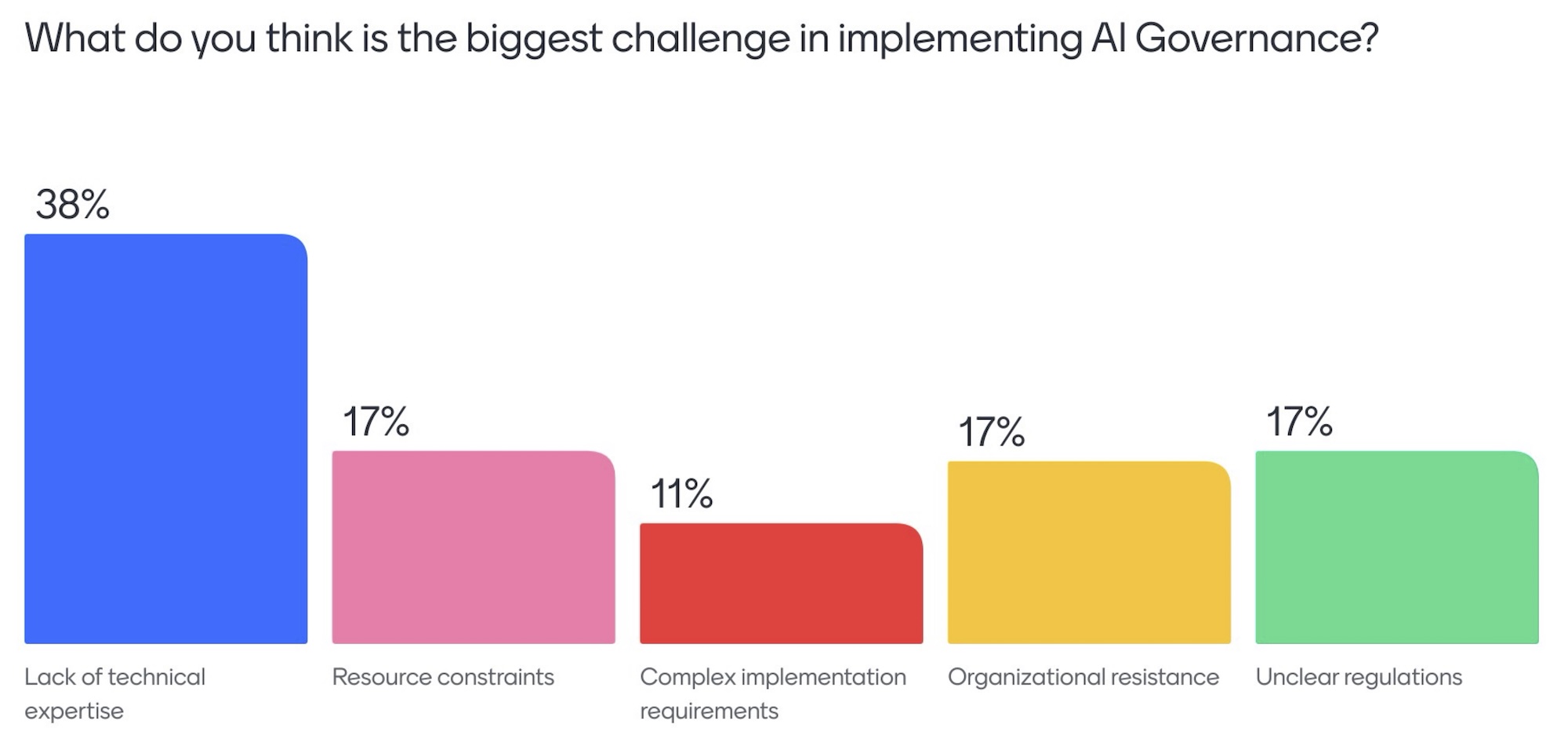

The biggest challenge in implementing AI governance, according to compliance officers at the conference, is a lack of technical expertise (38% of respondents). Other key concerns include resource constraints, complex implementation requirements, and organizational resistance.

To address this, financial institutions must:

✅ Invest in AI literacy training for compliance officers and auditors.

✅ Develop AI risk assessment frameworks based on existing regulatory principles.

✅ Foster collaboration between compliance, risk, IT, and AI teams to ensure holistic oversight.

✅ Create explainability and transparency requirements for AI models to ensure they align with ethical and regulatory standards.
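To make the risk assessment item concrete for the "Alert-ageddon" scenario, here is a minimal sketch of one measurable control: tracking the share of alerts that investigators close as false positives. The record layout, the `alert_quality` helper, and the 0.90 ceiling are all assumptions for illustration; each institution would set its own threshold and data fields.

```python
def alert_quality(alerts):
    """Summarize investigated alerts.

    Each alert is a dict with a boolean 'confirmed' field, set to True
    when investigators confirmed the alert as genuinely suspicious.
    """
    total = len(alerts)
    if total == 0:
        return {"total": 0, "false_positive_share": None}
    false_positives = sum(1 for alert in alerts if not alert["confirmed"])
    return {"total": total, "false_positive_share": false_positives / total}


# Hypothetical month of investigated alerts: 50 confirmed, 950 dismissed.
alerts = [{"confirmed": True}] * 50 + [{"confirmed": False}] * 950

MAX_FALSE_POSITIVE_SHARE = 0.90  # assumed internal ceiling, not a regulatory value

report = alert_quality(alerts)
needs_model_review = report["false_positive_share"] > MAX_FALSE_POSITIVE_SHARE

print(report, needs_model_review)
```

The point of a number like this is that it gives the question "who is accountable?" something to attach to: a named owner for the metric, and an agreed action when it crosses the line.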

Compliance Officers Must Lead AI Governance

Compliance officers who understand AI will be at the forefront of financial risk and governance. As our discussion at ABCOMP 2025 highlighted, accountability in AI-driven decision-making is still widely debated. Compliance officers cannot afford to be passive observers. They must take an active role in understanding, governing, and ensuring the responsible use of AI within their institutions.

The uncertainty and inconsistency in accountability responses at the conference make one thing clear—there’s still a lot of work to do. This should be recognized as a call for well-defined guidelines and standardized accountability structures. Compliance professionals are navigating uncharted territory, and AI governance must evolve alongside technological advancements.

AI governance should not be seen as an obstacle to innovation but as a foundation that enables responsible progress. When the right governance structures are in place, organizations can confidently innovate while mitigating risks and harms. The principles of AI governance (transparency, accountability, fairness, and security) provide a clear framework. These principles need not complicate things; they help ensure that AI remains a tool for progress while safeguarding against unintended consequences.

What steps is your organization taking to integrate AI governance into its compliance framework?