Leveraging Existing Legal Authority to Guide AI Development

May 7, 2025

E.F. Legara

M. Cerilles Jr.

B.R. Limcumpao

Abstract

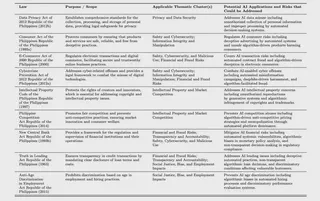

The governance of Artificial Intelligence (AI) presents distinct challenges for nations that primarily adopt, rather than develop, these technologies, particularly those lacking AI-specific legislation. Policymakers worldwide, including those in the Philippines, face a balancing act: enabling innovation while safeguarding public interests amid rapid digital transformation, socioeconomic disparities, and evolving regulatory capacity. This paper presents a pragmatic, risk-based AI governance framework developed in the Philippines, offering a potential model for other AI-adopting nations. We systematically identify key AI-related risks, ranging from data privacy violations and algorithmic bias to labor disruptions and cybersecurity threats, and map them onto an inventory of fourteen existing Philippine laws spanning data protection, cybercrime, consumer protection, intellectual property, and labor. By analyzing the interplay between AI adoption and these established legal instruments, the proposed framework demonstrates how current law can be adaptively reinterpreted and leveraged to mitigate algorithmic harms without initially requiring new statutes. Our findings highlight the adaptability of existing legal structures, and we argue that this approach fosters more legitimate, institutionally grounded, and practically implementable AI governance within specific socio-legal contexts. While dedicated AI statutes may eventually become necessary, this study shows that existing frameworks offer a transferable blueprint, particularly for developing economies, and a valuable tool for context-aware decision-making that can immediately guide AI’s societal integration, aligning technological progress with broader social, ethical, and legal objectives.

Type

Publication

SSRN